AI Is Not Your CTO, It Needs Instructions

AI coding assistants promise speed, but often fall short without structure. Learn why PRD-to-Code beats vibe coding for building reliable, scalable software with AI.

Insights, tutorials, and thoughts on AI, technology, and innovation. Exploring the craft of building intelligent systems with purpose.

Explore Agent Contracts, a framework for defining, verifying, and certifying AI-driven systems to ensure reliability and alignment with human intent.

Compare Nuvi, n8n, RelevanceAI, OpenAI GPTs, LindyAI, and Lovable to find the easiest, most human-centric AI agent platform.

Discover how to build autonomous AI agents using LangGraph, CrewAI, and OpenAI Swarm. Compare features, architectures, and use cases to choose the right framework for your needs.

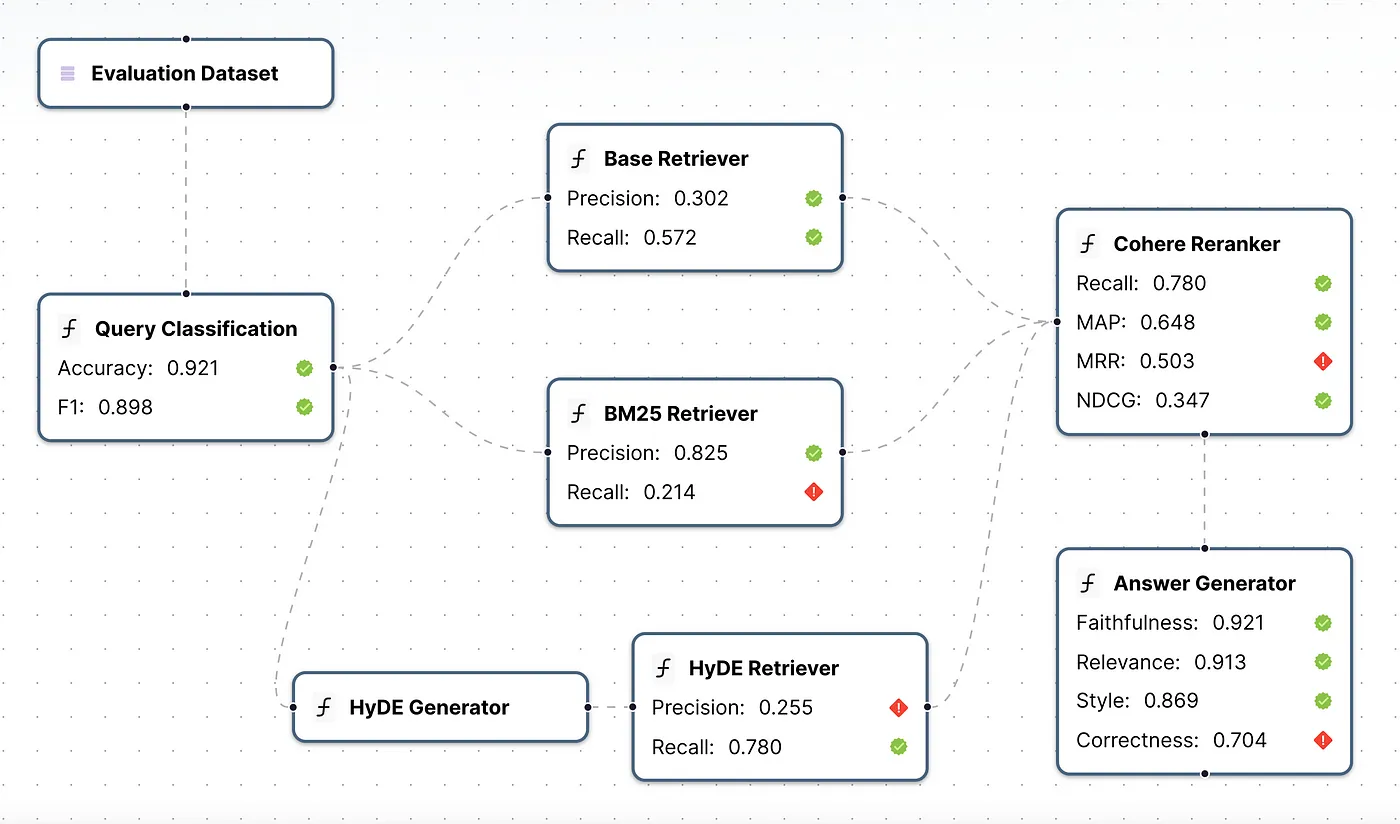

Explore how to systematically benchmark Retrieval-Augmented Generation (RAG) systems using synthetic data. This case study walks through building RAG systems, generating synthetic datasets, and evaluating performance to make informed design choices. Learn how to use vector stores, keyword-based retrieval, and hybrid methods to improve your LLM applications.

Discover the advantages of using synthetic data for LLM testing and evaluation. Learn how to generate and utilize synthetic datasets, explore real-world examples, and understand the challenges of maintaining quality. Improve your AI system...

Discover a comprehensive method to evaluate complex GenAI applications by breaking down each module and step in the pipeline. Learn how to gain granular insights, tailor auto-evaluators, and use continuous-eval for detailed performance metrics and tests. Enhance your AI application evaluation process with our step-by-step case study and practical examples.

Learn how to effectively evaluate LLM pipelines using both reference-free and reference-based metrics. This guide provides insights into quick and partial evaluations using reference-free methods, and demonstrates how to achieve comprehensive and reliable assessments with reference-based metrics. Understand the strengths and limitations of each approach, and discover how to implement a balanced evaluation strategy to optimize your LLM pipeline performance.

Explore three essential methods to evaluate LLM pipelines: reference-free, synthetic-dataset-based, and golden-dataset-based evaluation. Understand the pros and cons of each approach and learn how to implement them for quick, consistent, and comprehensive insights. Start with reference-free and synthetic-dataset evaluations for fast results and build a robust golden dataset over time for the most reliable assessment. Enhance your LLM pipeline...

Discover how to evaluate different aspects of LLM-generated answers for optimal performance in Retrieval-Augmented Generation (RAG) applications. Learn about holistic and aspect-specific evaluation techniques, including correctness, faithfulness, relevance, logic, and style. Explore various metrics, from deterministic to semantic and LLM-based, and understand their correlation with human judgments. Find out how to use ensemble models and hybrid pipelines to balance cost and accuracy, ensuring your RAG system meets user expectations. Get practical insights and tools to enhance your evaluation process and improve LLM outputs.

Explore the complexities of creating effective Retrieval-Augmented Generation (RAG) systems for LLMs. Learn about various evaluation techniques for retrieval in RAG pipelines, including precision, recall, and rank-aware metrics like MRR, MAP, and NDCG. Understand the importance of setting up a robust evaluation framework to guide development, ensuring your system meets specific use case requirements. Discover actionable insights to improve retrieval performance, from precision-recall benchmarking to using rank-aware metrics for post-processing. Get ready to enhance your LLM applications with practical tips and techniques for reliable and efficient retrieval.
